Fixing Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/io/Charsets

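When writing a client program that connects to Hive directly through Hive's driver class, or when writing a Spark program, you may hit the following exception: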

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/io/Charsets

at org.apache.hadoop.security.Credentials.<clinit>(Credentials.java:222)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:334)

at org.apache.spark.rdd.HadoopRDD.getJobConf(HadoopRDD.scala:178)

at org.apache.spark.rdd.HadoopRDD.getPartitions(HadoopRDD.scala:198)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:250)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:250)

at org.apache.spark.Partitioner$$anonfun$defaultPartitioner$2.apply(Partitioner.scala:66)

at org.apache.spark.Partitioner$$anonfun$defaultPartitioner$2.apply(Partitioner.scala:66)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:245)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:245)

at scala.collection.immutable.List.foreach(List.scala:381)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:245)

at scala.collection.immutable.List.map(List.scala:285)

at org.apache.spark.Partitioner$.defaultPartitioner(Partitioner.scala:66)

at org.apache.spark.rdd.RDD$$anonfun$groupBy$1.apply(RDD.scala:688)

at org.apache.spark.rdd.RDD$$anonfun$groupBy$1.apply(RDD.scala:688)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:362)

at org.apache.spark.rdd.RDD.groupBy(RDD.scala:687)

at wc.WordCount$.main(WordCount.scala:37)

at wc.WordCount.main(WordCount.scala)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.io.Charsets

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 34 more

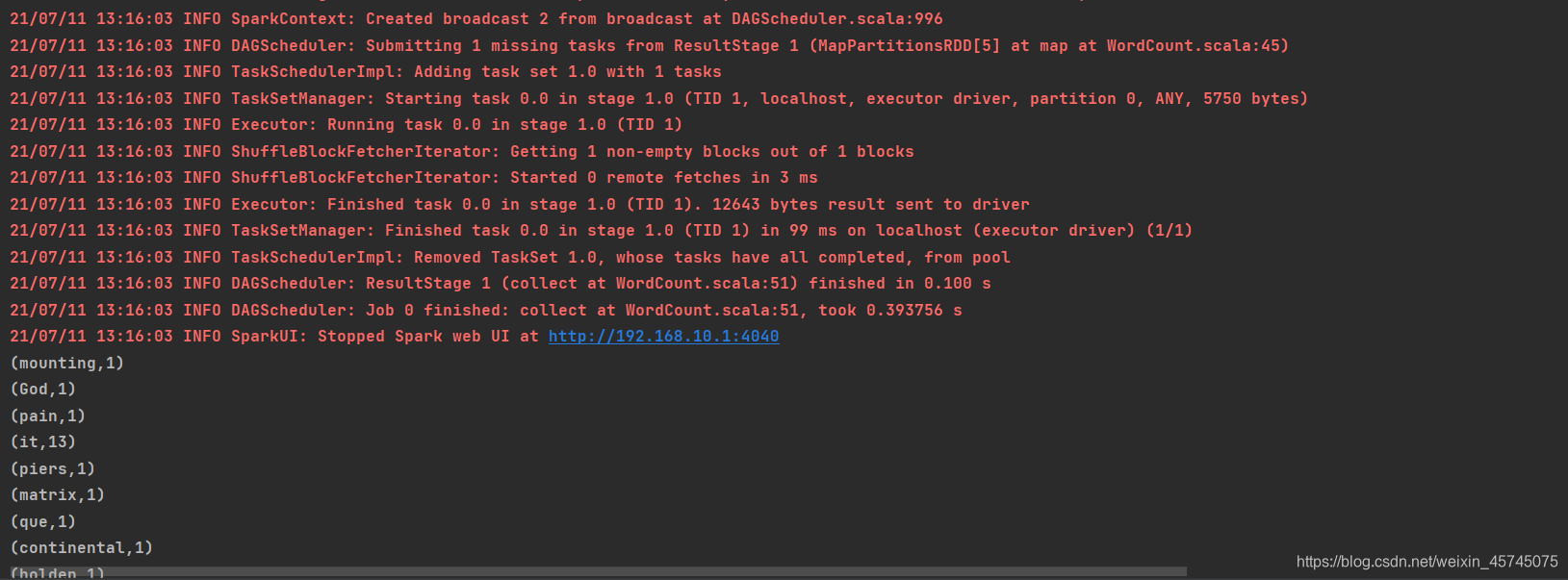

21/07/11 13:11:52 INFO SparkContext: Invoking stop() from shutdown hook

21/07/11 13:11:52 INFO SparkUI: Stopped Spark web UI at http://192.168.10.1:4040

21/07/11 13:11:52 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

21/07/11 13:11:52 INFO MemoryStore: MemoryStore cleared

21/07/11 13:11:52 INFO BlockManager: BlockManager stopped

21/07/11 13:11:52 INFO BlockManagerMaster: BlockManagerMaster stopped

21/07/11 13:11:52 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

21/07/11 13:11:52 INFO SparkContext: Successfully stopped SparkContext

21/07/11 13:11:52 INFO ShutdownHookManager: Shutdown hook called

21/07/11 13:11:52 INFO ShutdownHookManager: Deleting directory C:\Users\22231\AppData\Local\Temp\spark-e7fbcdb6-065a-445c-ae10-320ab5d2c705

At first glance this exception is a missing dependency: the commons-io jar is not on the classpath.

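However, you may find that adding the jar does not fix it right away. That is because older commons-io releases do not contain the class org.apache.commons.io.Charsets (it is a class, not a method; its Javadoc marks it as added in 2.3), so with the wrong version on the classpath the same error is still thrown.

The practical point is to make sure a sufficiently recent commons-io version is the one that actually ends up on the classpath.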
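To confirm whether the class can be loaded at all, and from which jar, a small check like the one below may help. It is only a minimal sketch: the class name `CharsetsCheck` and the helper `locate` are made up for illustration, and it has to be run with the same classpath as the failing program to be meaningful.

```java
import java.security.CodeSource;

// Hypothetical helper, not part of Hive, Spark, or commons-io.
class CharsetsCheck {

    // Returns where the class was loaded from, or null if it cannot be loaded.
    static String locate(String className) {
        try {
            // initialize=false: resolve the class without running its static initializers
            Class<?> c = Class.forName(className, false, CharsetsCheck.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            // JDK classes usually have no code source
            return src == null ? "(no code source, e.g. JDK class)" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            return null;
        }
    }

    public static void main(String[] args) {
        String name = "org.apache.commons.io.Charsets";
        String where = locate(name);
        System.out.println(where == null
                ? name + " is NOT on the classpath"
                : name + " loaded from " + where);
    }
}
```

If the class is found, the printed location normally includes the jar file name, which shows which commons-io version is being picked up.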

I used version 2.5 here: http://mvnrepository.com/artifact/commons-io/commons-io/2.5

Solution: add the dependency to the pom file:

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>2.5</version>

</dependency>
