HarmonyOS 6 - Real-Time Speech-to-Text Recording Example


Development environment:

Development tool: DevEco Studio 6.0.1 Release

API version: API 21

All code in this article has been tested successfully on an emulator.

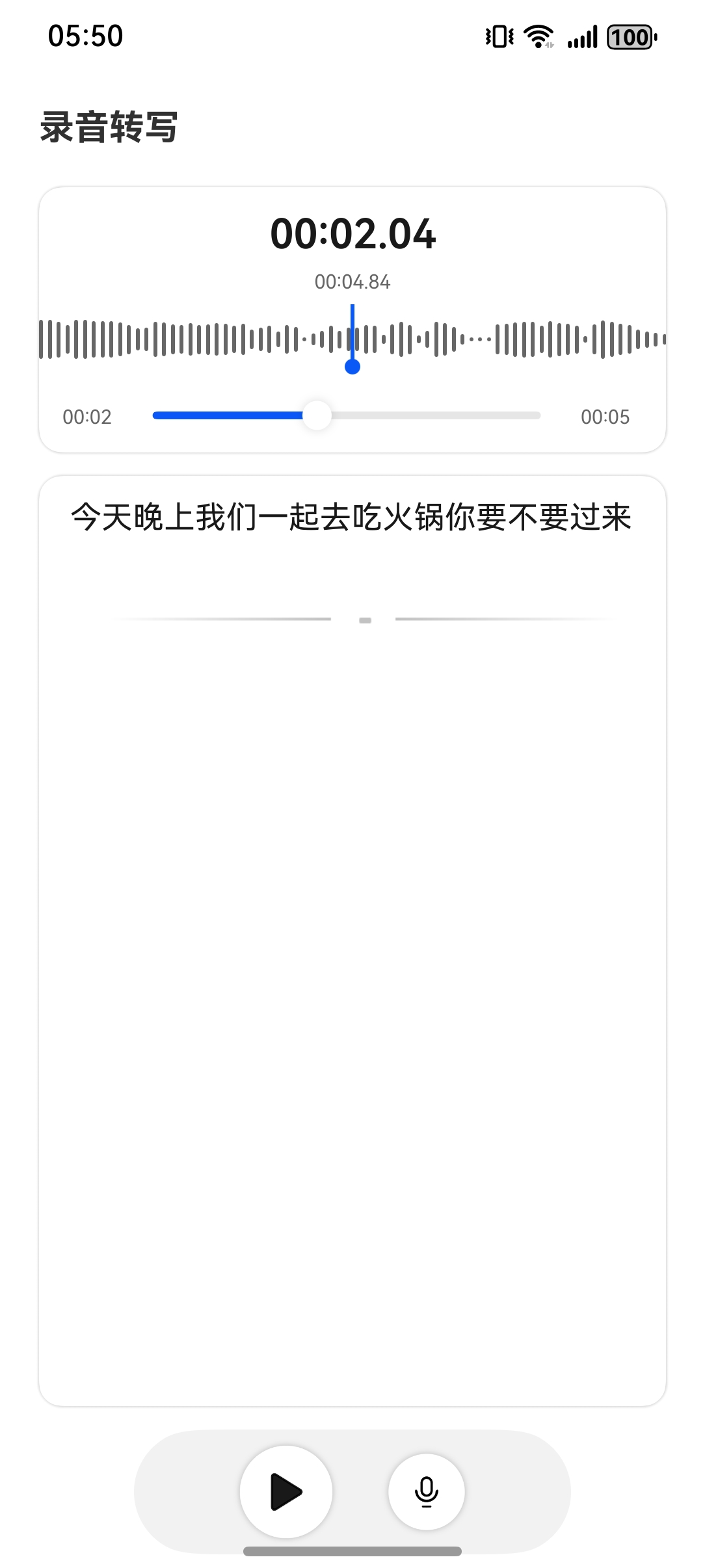

1. 效果

2. 需求

具体需求如下:

- 点击录音按钮,可以进行录音

- 并在录音过程中,可以实时转成文字,显示到页面中

- 并且还可以回放录音,回访过程中可以暂停

- 也可以重新再进行录音

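3. Analysis

1. Functional Requirements

The component's core features are real-time recording, audio playback, speech-to-text recognition, waveform visualization, and progress control.

2. Technical Architecture

- Audio recording: capture audio with AudioCapturer

- Audio playback: manage audio rendering with AudioRendererManager

- Speech recognition: integrate SpeechRecognizer to convert speech to text

- Permission management: request and check the microphone permission

3. State Management

The component's core state variables are:

- Recording state and playback state

- Recognized text

- Waveform data and playback progress

- Audio file information

4. UI Layout

- Waveform area: shows the recording or playback waveform in real time

- Progress bar: supports adjusting the playback position

- Text area: shows the speech recognition result in real time

- Button area: record, play, and pause controls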

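5. Core Feature Implementation

5.1 Recording

- Initialize the audio capturer

- Compute the audio decibel level in real time to generate the waveform

- Start speech recognition at the same time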
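The decibel-to-waveform step can be sketched as a pure function. This is a minimal illustration using the standard RMS definition, not the article's exact code; the constant names and values below are assumptions that mirror the Constants class shown later (16-bit samples, a -90 dB floor, a maximum bar height of 30).

```typescript
// Hypothetical constants, modeled on the article's Constants class.
const MIN_DB = -90;           // quietest level that is still drawn
const VOLUME_MAX = 32768;     // full scale of a signed 16-bit sample
const MAX_BAR_HEIGHT = 30;    // tallest waveform bar
const MIN_BAR_HEIGHT = 2;     // floor so that silence is still visible

// Convert one buffer of 16-bit PCM samples into a waveform bar height.
function barHeightFromSamples(samples: Int16Array): number {
  if (samples.length === 0) {
    return 0;
  }
  let sumOfSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    const normalized = samples[i] / VOLUME_MAX; // range [-1, 1)
    sumOfSquares += normalized * normalized;
  }
  const rms = Math.sqrt(sumOfSquares / samples.length);
  // 20 * log10(rms) is the level in dBFS; log10(0) is -Infinity,
  // so the value is clamped to [MIN_DB, 0].
  const db = Math.max(MIN_DB, Math.min(0, 20 * Math.log10(rms)));
  // Map [MIN_DB, 0] linearly onto [0, MAX_BAR_HEIGHT].
  const height = ((db - MIN_DB) / -MIN_DB) * MAX_BAR_HEIGHT;
  return Math.max(MIN_BAR_HEIGHT, height);
}
```

Calling this once per timer tick with the samples collected since the previous tick yields one bar per tick.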

5.2 Playback

- Load and render the audio file

- Keep the progress in sync

- Update the waveform animation in sync
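Progress synchronization mostly comes down to arithmetic on byte offsets in the PCM file. The sketch below assumes the format used in this article (16 kHz, mono, 16-bit); the function names are hypothetical and chosen only for illustration.

```typescript
// Assumed PCM format: 16 kHz, mono, 16-bit samples.
const SAMPLE_RATE_HZ = 16000;
const BYTES_PER_SAMPLE = 2;
const BYTES_PER_SECOND = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE; // 32000

// Round a seek offset down to a whole sample so that playback never
// starts in the middle of a 16-bit value.
function alignToSample(offset: number): number {
  const whole = Math.max(0, Math.floor(offset));
  return whole - (whole % BYTES_PER_SAMPLE);
}

// Convert a byte offset in the file to elapsed seconds.
function offsetToSeconds(offsetBytes: number): number {
  return offsetBytes / BYTES_PER_SECOND;
}

// Fraction of the file already played, in [0, 1]; 0 for an empty file.
function progressFraction(offsetBytes: number, fileSize: number): number {
  if (fileSize <= 0) {
    return 0;
  }
  return Math.min(1, Math.max(0, offsetBytes / fileSize));
}
```

The same fraction can be used to find the matching position in the saved waveform data, which keeps the slider and the waveform in step.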

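5.3 Speech Recognition

- Recognize speech in real time and display the result

- Distinguish intermediate results from final results

- Highlight keywords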
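One way to keep intermediate and final results apart is to store them separately and join them only for display. This is a simplified sketch, not the article's implementation; RecognitionUpdate and TranscriptBuffer are hypothetical names.

```typescript
// Hypothetical shape of one recognition callback payload.
interface RecognitionUpdate {
  text: string;
  isFinal: boolean;
}

// Keeps confirmed text and the still-changing tail separate.
class TranscriptBuffer {
  private finalized: string = '';
  private interim: string = '';

  apply(update: RecognitionUpdate): void {
    if (update.isFinal) {
      // A final result is appended once and never revised again.
      this.finalized += update.text;
      this.interim = '';
    } else {
      // An intermediate result replaces the previous guess.
      this.interim = update.text;
    }
  }

  // Text to show on screen: confirmed text plus the current guess.
  display(): string {
    return this.finalized + this.interim;
  }
}
```

Because intermediate text is replaced rather than appended, the on-screen transcript does not accumulate duplicated fragments while the engine refines a sentence.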

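6. Interaction Experience

- Real-time waveform animation updates

- Precise control when dragging the progress bar

- Smooth transitions between states

- Error prompts and permission guidance

7. Performance

- Caching and update strategy for waveform data

- Streaming processing of audio data

- Memory management and resource release

- Sequencing of asynchronous operations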
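For the waveform update strategy, a fixed-size sliding window keeps the number of rendered bars constant no matter how long the recording runs. The class below is a minimal sketch of that idea; WaveWindow is a hypothetical name, and the capacity plays the role of a constant such as WAVE_RECORDING_COUNT.

```typescript
// Fixed-capacity window over the most recent waveform bar heights.
class WaveWindow {
  private readonly capacity: number;
  private bars: number[] = [];

  constructor(capacity: number) {
    this.capacity = capacity;
  }

  // Append a new bar, dropping the oldest one once the window is full.
  push(height: number): void {
    if (this.bars.length >= this.capacity) {
      this.bars.shift();
    }
    this.bars.push(height);
  }

  // Copy of the current window, safe to hand to the UI layer.
  snapshot(): number[] {
    return this.bars.slice();
  }
}
```

The full history can still be stored elsewhere for playback, while the UI only ever binds to the small window.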

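This component implements the complete flow of audio recording, playback, and speech recognition. The real-time waveform improves the user experience, and the design also takes performance and error handling into account.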

4. 开发

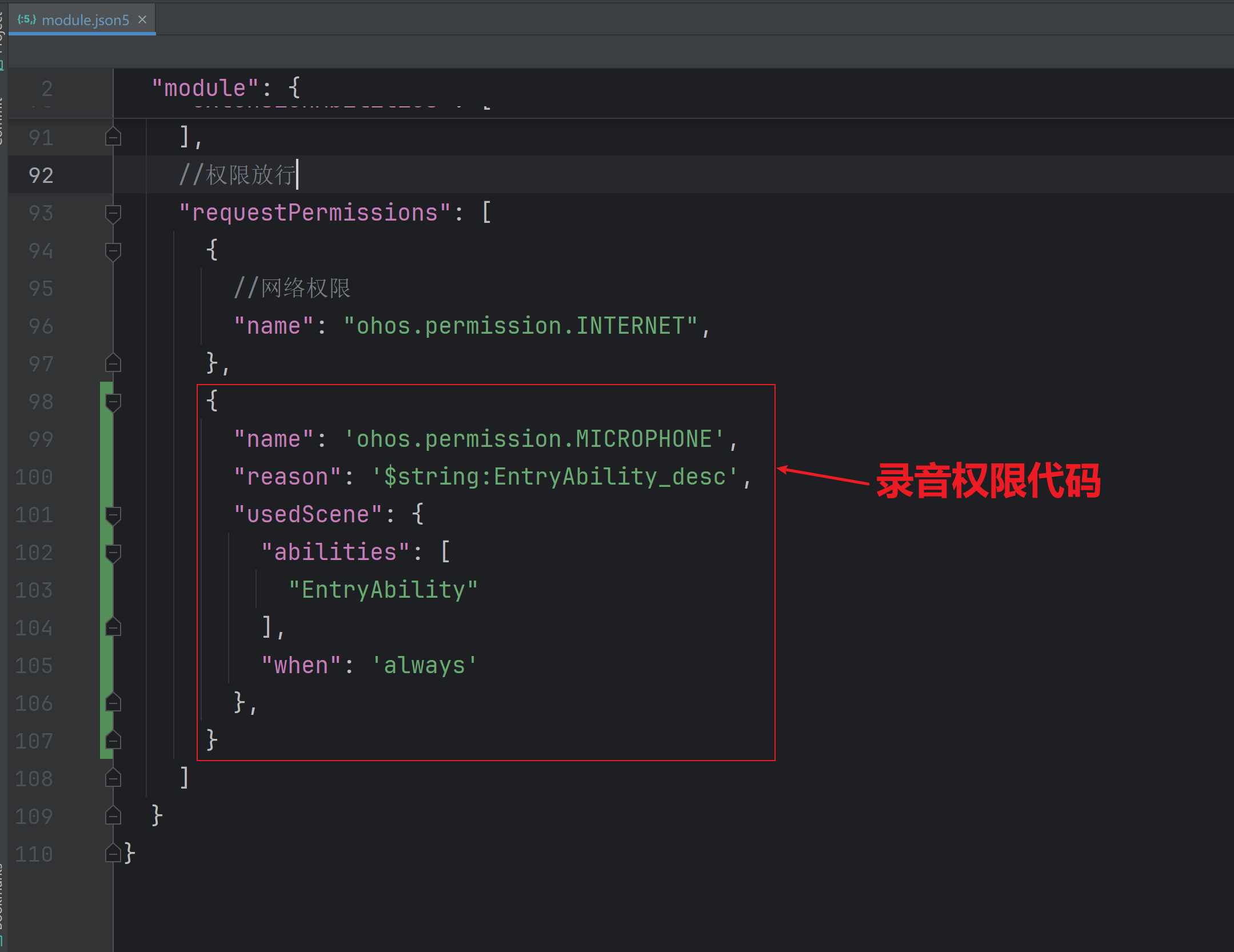

需要录音,必不可少的是麦克风权限,需要在 module.json5 中添加 ohos.permission.MICROPHONE 权限。如下图所示:

代码:

{

"name": 'ohos.permission.MICROPHONE',

"reason": '$string:EntryAbility_desc',

"usedScene": {

"abilities": [

"EntryAbility"

],

"when": 'always'

},

}

The page code is as follows:

import { AudioToTextComponent } from '../../component/AudioToTextComponent';

/**

* Speech-to-text recording example

*/

@Entry

@Component

struct AudioToText {

@State message: string = 'Hello World';

build() {

Column() {

Column({ space: 20 }) {

Text('Speech-to-Text Recording Example')

.fontSize(18)

.fontWeight(700);

AudioToTextComponent();

}

.alignItems(HorizontalAlign.Start)

.width('100%')

.height('100%')

.padding(20)

.backgroundColor(Color.White);

}

.height('100%')

.width('100%')

}

}

The supporting files are listed below.

AudioToTextComponent.ets:

import { fileIo as fs } from '@kit.CoreFileKit';

import { Constants } from '../common/Constants';

import { Permissions } from '@kit.AbilityKit';

import { hilog } from '@kit.PerformanceAnalysisKit';

import { SpeechRecognizer } from '../common/utils/SpeechRecognizer';

import { AudioCapturer } from '../common/utils/AudioCapturer';

import { AudioRendererManager } from '../common/utils/AudioRendererManager';

import { findKeyword, FormatString, toTimeStr, toTimeStr2 } from '../common/utils/Utils';

import PermissionsCheck from '../common/utils/PermissionsCheck';

@Component

export struct AudioToTextComponent {

@State text: string = '';

@State filename: string = '';

@State generatedText: string = '';

@State recognitionResult: string = '';

@State fileSize: number = 0;

@State playingIndex: number = 0;

@State isPause: boolean = true;

@State isRecording: boolean = false;

@StorageLink('IsFinal') isFinal: boolean = false;

@State recordingWaveHeights: number[] = [];

@State playingWaveHeights: number[] = [];

@StorageLink('RWOffset') rwOffset: number = 0;

@StorageLink('RecordOffset') recordOffset: number = 0;

@StorageLink('AudioAtEnd') @Watch('stopAudioAtEnd') audioAtEnd: boolean = false;

@State @Watch('result') speechRecognizer: SpeechRecognizer = new SpeechRecognizer();

private permission: Permissions = 'ohos.permission.MICROPHONE';

private intervalId?: number = undefined;

private audioCapturer: AudioCapturer = new AudioCapturer();

private audioRendererMgr: AudioRendererManager = new AudioRendererManager();

result() {

this.generatedText = this.speechRecognizer.generatedText;

this.recognitionResult = this.speechRecognizer.recognitionResult;

}

async aboutToAppear(): Promise<void> {

if (this.audioRendererMgr.rendererState() === undefined) {

await this.audioRendererMgr.initRenderer();

}

if (!this.speechRecognizer.asrEngine) {

this.speechRecognizer.createByCallback();

}

}

stopAudioAtEnd() {

if (this.audioAtEnd) {

this.audioAtEnd = false;

this.audioRendererMgr.pauseRenderer();

if (this.intervalId !== undefined) {

clearInterval(this.intervalId);

}

this.rwOffset = 0;

this.playingWaveHeights = new Array(Constants.WAVE_RECORDING_COUNT).fill(0);

this.playingWaveHeights.push(...this.audioCapturer.getSavedDbData().slice(0, Constants.WAVE_RENDER_COUNT));

this.playingIndex = Constants.WAVE_RENDER_COUNT;

this.isPause = true;

}

}

updateRecordingWaveHeight() {

let h = this.audioCapturer.calculateDecibelHeight();

if (this.recordingWaveHeights.length >= Constants.WAVE_RECORDING_COUNT) {

this.recordingWaveHeights.shift();

}

this.recordingWaveHeights.push(h);

}

build() {

Column({ space: 12 }) {

this.playBuilder();

this.transcriptionBuilder();

this.functionButtons();

}

.height('100%')

.width('100%')

}

// Play the recording

@Builder

playBuilder() {

Column() {

Column({ space: 8 }) {

Column({ space: 6 }) {

Text(this.isRecording ? toTimeStr2(this.recordOffset) : toTimeStr2(this.rwOffset))

.fontSize(21)

.fontWeight(600)

.fontColor('rgba(0, 0, 0, 0.9)')

.textAlign(TextAlign.Center);

if (!this.isRecording) {

Text(toTimeStr2(this.fileSize))

.fontSize(10)

.fontWeight(400)

.fontColor('rgba(0, 0, 0, 0.6)')

.textAlign(TextAlign.Center);

}

}

.height(40)

.width('100%');

Stack() {

this.wavyAnimation();

if (this.isRecording || this.fileSize) {

Image($r('app.media.pointer'))

.width(8)

.height(36);

}

}

.margin({ bottom: 55 });

}

.height(100)

.width('100%')

.margin({ top: 12 });

if (!this.isRecording) {

Row({ space: 5 }) {

Text(toTimeStr(this.rwOffset))

.fontSize(10)

.fontWeight(400)

.fontColor('rgba(0, 0, 0, 0.6)');

Slider({

min: 0,

max: this.fileSize,

value: this.rwOffset,

style: SliderStyle.OutSet

})

.height(4)

.width(230)

.trackThickness(4)

.margin({ bottom: 2 })

.padding({ left: 6, right: 6 })

.blockSize({ width: 15, height: 15 })

.enabled(this.fileSize ? true : false)

.onChange((value: number, mode: SliderChangeMode) => {

let offset = Math.floor(value);

if (offset % (Constants.SAMPLE_BYTE) !== 0) {

offset -= 1;

}

this.audioRendererMgr.setReadOffset(offset);

// Dragging Position Percentage

let percent = offset / this.fileSize;

// Calculate the node positions corresponding to the waveform animation.

let dbData = this.audioCapturer.getSavedDbData();

// The amount of offset required

let offsetCount = Math.floor(dbData.length * percent);

let waves: number[] = new Array(Constants.WAVE_RECORDING_COUNT).fill(0);

waves.push(...dbData);

this.playingWaveHeights = waves.slice(offsetCount, offsetCount + Constants.WAVE_RENDER_COUNT);

this.playingIndex = offsetCount + Constants.WAVE_RENDER_COUNT - Constants.WAVE_RECORDING_COUNT;

this.rwOffset = offset;

hilog.info(0X0000, 'testTag', '%{public}s', mode.toString());

});

Text(toTimeStr(this.fileSize))

.fontSize(10)

.fontWeight(400)

.fontColor('rgba(0, 0, 0, 0.6)');

}

.width('100%')

.padding({ left: 12 });

}

}

.width('100%')

.height(136)

.borderRadius(12)

.backgroundColor(Color.White)

.shadow({

radius: 5,

color: 'rgba(0, 0, 0, 0.25)',

offsetX: 0,

offsetY: 0

});

}

// Wavy Animation

@Builder

wavyAnimation() {

Scroll() {

Row({ space: 2.5 }) {

if (this.isRecording) {

// Animated Recording

Row({ space: 2.5 }) {

ForEach(this.recordingWaveHeights, (height: number) => {

Column()

.width(2)

.height(height)

.backgroundColor('rgba(0, 0, 0, 0.6)')

.borderRadius(4);

});

}

.width('50%')

.height(40)

.justifyContent(FlexAlign.End);

Row({ space: 2.5 }) {

ForEach(Constants.WAVE_RECORD_INDEX, (i: number) => {

Column()

.width(2)

.height(2)

.backgroundColor('rgba(0, 0, 0, 0.6)')

.borderRadius(4)

.onClick(() => {

hilog.info(0X0000, 'testTag', '%{public}s', i);

})

});

}

.width('50%')

.height(40)

.justifyContent(FlexAlign.Start);

} else {

// Play animation

Row({ space: 2.5 }) {

ForEach(this.playingWaveHeights, (height: number) => {

Column()

.width(2)

.height(height)

.backgroundColor('rgba(0, 0, 0, 0.6)')

.borderRadius(4);

});

}

.width('100%')

.height(40)

.justifyContent(FlexAlign.Start);

}

}

.width('100%');

}

.height(20)

.scrollBar(BarState.Off)

.scrollable(ScrollDirection.None);

}

// Transcribe to text

@Builder

transcriptionBuilder() {

Column() {

Column({ space: 12 }) {

if (this.isRecording) {

Row({ space: 4 }) {

Image($r('app.media.loading'))

.width(this.isRecording ? 17 : 8)

.height(this.isRecording ? 16 : 8);

Text('Recognizing...')

.fontSize(14)

.fontWeight(400)

.fontColor('rgba(0, 0, 0, 0.9)');

}

.width(this.isRecording ? 120 : 78)

.height(27)

.borderRadius(4)

.padding({ left: 8 })

.backgroundColor('rgba(0, 0, 0, 0.05)');

}

Scroll() {

Text() {

ForEach(findKeyword(this.isFinal ? this.recognitionResult : this.recognitionResult + this.generatedText,

this.text),

(item: FormatString) => {

if (item.isAim) {

Span(item.char)

.fontSize(16)

.fontWeight(400)

.fontColor('#0A59F7'); // Highlight the target content

} else {

Span(item.char)

.fontSize(16)

.fontWeight(400)

.fontColor('rgba(0, 0, 0, 0.9)');

}

});

};

}

.scrollBar(BarState.Off);

if (this.generatedText && !this.isRecording) {

Image($r('app.media.line'))

.height(3)

.width('90%')

.margin({ top: 30, left: 20 });

}

}

.margin({ top: 12 })

.height('50%')

.alignItems(HorizontalAlign.Start);

if (this.text) {

Row() {

Image($r('app.media.up'))

.width(36)

.height(36);

Image($r('app.media.down'))

.width(36)

.height(36);

}

.height(40)

.margin({ top: 50, left: 128 });

}

}

.width('100%')

.layoutWeight(1)

.backgroundColor(Color.White)

.borderRadius(12)

.alignItems(HorizontalAlign.Start)

.padding({ left: 16, right: 16 })

.shadow({

radius: 5,

color: 'rgba(0, 0, 0, 0.25)',

offsetX: 0,

offsetY: 0

});

}

// Function Button

@Builder

functionButtons() {

Column() {

if (!this.isRecording) {

Row({ space: 20 }) {

// Play, Pause Button

Image(this.isPause ? $r('app.media.pause') : $r('app.media.play'))

.width(56)

.height(56)

.enabled(this.fileSize ? true : false)

.onClick(async () => {

if (this.isPause) {

await this.audioRendererMgr.startRenderer(this.getUIContext(), this.filename);

this.intervalId = setInterval(() => {

this.playingWaveHeights.shift();

let dbData = this.audioCapturer.getSavedDbData();

if (this.playingIndex < dbData.length) {

this.playingWaveHeights.push(dbData[this.playingIndex]);

}

// Advance only after the current bar has been appended, so no bar is skipped

this.playingIndex++;

}, Constants.WAVE_TICK_INTERVAL);

this.isPause = false;

} else {

await this.audioRendererMgr.pauseRenderer();

if (this.intervalId !== undefined) {

clearInterval(this.intervalId);

}

this.isPause = true;

}

});

// Start recording

Image($r('app.media.talk'))

.width(48)

.height(48)

.onClick(async () => {

let permissionAllowed = await PermissionsCheck.checkPermissions(this.permission);

if (permissionAllowed) {

if (!this.isRecording) {

if (this.intervalId !== undefined) {

clearInterval(this.intervalId);

this.intervalId = undefined;

}

if (!this.isPause) {

this.audioRendererMgr.pauseRenderer();

this.isPause = true;

}

this.isRecording = true;

this.filename = new Date().getTime().toString();

this.audioCapturer.createOn(this.filename, this.getUIContext());

this.speechRecognizer.createByCallback();

this.speechRecognizer.setListener();

this.speechRecognizer.startRecording();

this.rwOffset = 0;

this.recordOffset = 0;

this.fileSize = 0;

this.recordingWaveHeights = [];

this.intervalId = setInterval(() => {

this.updateRecordingWaveHeight();

}, Constants.WAVE_TICK_INTERVAL);

}

} else {

this.getUIContext().getPromptAction().showToast({

message: 'Microphone permission is not granted'

});

}

});

}

.width(224)

.height(64)

.borderRadius(32)

.justifyContent(FlexAlign.Center)

.backgroundColor('rgba(0, 0, 0, 0.05)');

} else {

// Stop recording

Image($r('app.media.record'))

.width(56)

.height(56)

.onClick(async () => {

if (this.intervalId !== undefined) {

clearInterval(this.intervalId);

this.intervalId = undefined;

}

this.isRecording = false;

this.speechRecognizer.stop();

await this.audioCapturer.stopAndRelease();

this.playingWaveHeights = new Array(Constants.WAVE_RECORDING_COUNT).fill(0);

this.playingWaveHeights.push(...this.audioCapturer.getSavedDbData().slice(0, Constants.WAVE_RENDER_COUNT));

this.playingIndex = Constants.WAVE_RENDER_COUNT;

if (this.filename) {

let context: Context = this.getUIContext().getHostContext() as Context;

let pathDir = context.cacheDir;

let filePath = pathDir + `/${this.filename}.pcm`;

this.fileSize = fs.statSync(filePath).size;

}

});

}

}

.width('100%')

}

}
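The slider's onChange handler above maps a byte offset to a window of waveform bars. The same mapping can be written as a pure function, which makes it easier to test in isolation. This is a sketch with hypothetical names (SeekWindow, windowForSeek); leadCount and renderCount stand in for WAVE_RECORDING_COUNT and WAVE_RENDER_COUNT.

```typescript
// Result of a seek: the bars to draw and the index of the next bar to append.
interface SeekWindow {
  heights: number[];
  nextIndex: number;
}

function windowForSeek(
  dbData: number[],     // saved bar heights for the whole recording
  offsetBytes: number,  // seek position in the PCM file
  fileSize: number,     // total PCM file size in bytes
  leadCount: number,    // zero-height bars shown before the recording starts
  renderCount: number   // number of bars visible at once
): SeekWindow {
  // Position of the seek point as a fraction of the file.
  const fraction = fileSize > 0 ? offsetBytes / fileSize : 0;
  const start = Math.floor(dbData.length * fraction);
  // Prefix the data with leading zeros so the pointer can sit at the start.
  const padded: number[] = new Array<number>(leadCount).fill(0).concat(dbData);
  return {
    heights: padded.slice(start, start + renderCount),
    nextIndex: start + renderCount - leadCount
  };
}
```

For example, with ten bars, a seek to the middle of the file, two leading zeros, and four visible bars, the window is bars 4 to 7 and the next bar to append is index 7 (the eighth bar).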

The SpeechRecognizer file:

import { speechRecognizer } from '@kit.CoreSpeechKit';

import { hilog } from '@kit.PerformanceAnalysisKit';

import { BusinessError } from '@kit.BasicServicesKit';

import { AudioCapturer } from './AudioCapturer';

const DOMAIN = 0x0000;

const TAG = 'SpeechRecognizer';

export class SpeechRecognizer {

private sessionId: string = '123456';

private audioCapturer = new AudioCapturer();

asrEngine: speechRecognizer.SpeechRecognitionEngine | undefined = undefined;

// Real-time recognition results

generatedText: string = '';

// Final recognition result

recognitionResult: string = '';

// Create an engine and return it via a callback.

createByCallback() {

// Set up the engine creation parameters

let extraParam: Record<string, Object> = { 'locate': 'CN', 'recognizerMode': 'long' };

let initParamsInfo: speechRecognizer.CreateEngineParams = {

language: 'zh-CN',

online: 1,

extraParams: extraParam

};

// Call the createEngine method

speechRecognizer.createEngine(initParamsInfo, (err: BusinessError, speechRecognitionEngine:

speechRecognizer.SpeechRecognitionEngine) => {

if (!err) {

hilog.info(DOMAIN, TAG, 'succeeded in creating engine.');

// Receive the instance of the creation engine

this.asrEngine = speechRecognitionEngine;

this.setListener();

} else {

/**

* Error code 1002200001 returned when unable to create the engine,reason:

* failure to create the engine due to unsupported language, unsupported mode, initialization timeout,

* non-existent resources, etc.

*/

/**

* Error code 1002200006 is returned when the engine cannot be created, reason:

* the engine is currently busy,

* typically triggered when multiple applications simultaneously invoke the speech recognition engine.

*/

/**

* Error code 1002200008 is returned when the engine cannot be created, reason:The engine has been destroyed.

*/

hilog.error(DOMAIN, TAG, `Failed to create engine. Message: ${err.message}.`);

}

});

}

startListeningForRecording() {

let audioParam: speechRecognizer.AudioInfo = {

audioType: 'pcm',

sampleRate: 16000,

soundChannel: 1,

sampleBit: 16

};

let extraParam: Record<string, Object> = {

'recognitionMode': 0,

'vadBegin': 500,

'vadEnd': 10000,

// Maximum audio duration supported for recognition

'maxAudioDuration': 8 * 60 * 60 * 1000

};

let recognizerParams: speechRecognizer.StartParams = {

sessionId: this.sessionId,

audioInfo: audioParam,

extraParams: extraParam

};

hilog.info(DOMAIN, TAG, 'startListening start');

this.asrEngine?.startListening(recognizerParams);

}

// Microphone Voice to Text

async startRecording() {

try {

this.startListeningForRecording();

// Recording to obtain audio

let data: ArrayBuffer;

hilog.info(DOMAIN, TAG, 'create capture success');

this.audioCapturer.setDataCallback((dataBuffer: ArrayBuffer) => {

hilog.info(DOMAIN, TAG, 'start write');

hilog.info(DOMAIN, TAG, 'ArrayBuffer ' + JSON.stringify(dataBuffer));

data = dataBuffer;

let uint8Array: Uint8Array = new Uint8Array(data);

hilog.info(DOMAIN, TAG, 'ArrayBuffer uint8Array ' + JSON.stringify(uint8Array));

// Write audio stream

this.asrEngine?.writeAudio(this.sessionId, uint8Array);

});

} catch (err) {

this.generatedText = `Message: ${err.message}.`;

}

}

// Timing

async countDownLatch(count: number) {

while (count > 0) {

await this.sleep(40);

count--;

}

}

// Sleep

sleep(ms: number): Promise<void> {

return new Promise(resolve => setTimeout(resolve, ms));

}

// Set Callback

setListener() {

// Create callback object

let setListener: speechRecognizer.RecognitionListener = {

// Called when recognition starts successfully

onStart: (sessionId: string, eventMessage: string) => {

this.generatedText = '';

this.recognitionResult = '';

hilog.info(DOMAIN, TAG, `onStart, sessionId: ${sessionId} eventMessage: ${eventMessage}`);

},

// Event Callback

onEvent(sessionId: string, eventCode: number, eventMessage: string) {

hilog.info(DOMAIN, TAG,

`onEvent, sessionId: ${sessionId} eventCode: ${eventCode} eventMessage: ${eventMessage}`);

},

// Callback for recognition results, including intermediate and final outcomes

onResult: (sessionId: string, result: speechRecognizer.SpeechRecognitionResult) => {

hilog.info(DOMAIN, TAG, `onResult, sessionId: ${sessionId} result: ${JSON.stringify(result)}`);

if (result.isFinal) {

this.recognitionResult += result.result;

}

this.generatedText = result.result;

AppStorage.setOrCreate('IsFinal', result.isFinal);

},

// Callback upon recognition completion

onComplete(sessionId: string, eventMessage: string) {

hilog.info(DOMAIN, TAG, `onComplete, sessionId: ${sessionId} eventMessage: ${eventMessage}`);

},

// Error callback, error code returned via this method

/**

* Return error code 1002200002, recognition failed at startup,

* triggered when restarting the startListening method.

*/

// For more error codes, please refer to the Error Code Reference.

onError(sessionId: string, errorCode: number, errorMessage: string) {

hilog.error(DOMAIN, TAG,

`onError, sessionId: ${sessionId} errorCode: ${errorCode} errorMessage: ${errorMessage}`);

},

};

// Set Callback

try {

// Set Callback

this.asrEngine?.setListener(setListener);

hilog.info(DOMAIN, TAG, `Listener callback set.`);

} catch (e) {

hilog.error(DOMAIN, TAG, `Failed to set listener callback.`);

}

}

// Stop recognition

stop() {

this.asrEngine?.shutdown();

}

}

The AudioCapturer file:

import { audio } from '@kit.AudioKit';

import { BusinessError } from '@kit.BasicServicesKit';

import { hilog } from '@kit.PerformanceAnalysisKit';

import { fileIo } from '@kit.CoreFileKit';

import { Constants } from '../Constants';

const DOMAIN = 0x0000;

const TAG = 'AudioCapturer';

interface GeneratedObjectLiteralInterface_1 {

samplingRate: audio.AudioSamplingRate;

channels: audio.AudioChannel;

sampleFormat: audio.AudioSampleFormat;

encodingType: audio.AudioEncodingType;

}

interface GeneratedObjectLiteralInterface_2 {

source: audio.SourceType;

capturerFlags: number;

}

interface GeneratedObjectLiteralInterface_3 {

streamInfo: GeneratedObjectLiteralInterface_1;

capturerInfo: GeneratedObjectLiteralInterface_2;

}

export class AudioCapturer {

private sampleValCnt: number = 0;

private sampleValSum: number = 0;

private savedDbs: number[] = [];

private audioCapturer: audio.AudioCapturer | undefined = undefined;

// Audio Data Callback Method

private dataCallBack: ((data: ArrayBuffer) => void) | null = null;

private fd?: number = 0;

private url: string = '';

getSavedDbData(): number[] {

return this.savedDbs;

}

calculateDecibelHeight(): number {

if (this.sampleValCnt === 0) {

return 0;

}

// RMS is the square root of the mean of the squared samples

let rms = Math.sqrt(this.sampleValSum / this.sampleValCnt);

let db = Math.max(Constants.MIN_DB, Math.min(0, 20 * Math.log10(rms)));

this.sampleValCnt = 0;

this.sampleValSum = 0;

let res = Math.max(2, (db + Math.abs(Constants.MIN_DB)) / Math.abs(Constants.MIN_DB) * Constants.WAVE_HEIGHT_RADIO);

this.savedDbs.push(res);

return res;

}

// Audio stream information

private audioStreamInfo: GeneratedObjectLiteralInterface_1 = {

samplingRate: audio.AudioSamplingRate.SAMPLE_RATE_16000,

channels: audio.AudioChannel.CHANNEL_1,

sampleFormat: audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE,

encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW

};

// Audio collector information

private audioCapturerInfo: GeneratedObjectLiteralInterface_2 = {

source: audio.SourceType.SOURCE_TYPE_MIC,

capturerFlags: 0

};

// Audio Collector Option Information

private audioCapturerOptions: GeneratedObjectLiteralInterface_3 = {

streamInfo: this.audioStreamInfo,

capturerInfo: this.audioCapturerInfo

};

// Create an instance and start listening

createOn(filename: string, context: UIContext) {

audio.createAudioCapturer(this.audioCapturerOptions, async (err, data) => {

if (err) {

hilog.error(DOMAIN, TAG, `Invoke createAudioCapturer failed, code is ${err.code}, message is ${err.message}`);

} else {

hilog.info(DOMAIN, TAG, 'Invoke createAudioCapturer succeeded. Instance created.');

this.audioCapturer = data;

this.savedDbs = [];

let bufferSize: number = 0;

class Options {

offset?: number;

length?: number;

}

let contact = context.getHostContext() as Context;

let path = contact.cacheDir;

let filePath = path + `/${filename}.pcm`;

let file: fileIo.File = await fileIo.open(filePath, fileIo.OpenMode.READ_WRITE | fileIo.OpenMode.CREATE);

this.fd = file.fd;

this.url = 'fd://' + file.fd;

hilog.info(DOMAIN, 'testTag', '%{public}s', this.url);

let readDataCallback = async (buffer: ArrayBuffer) => {

let options: Options = {

offset: bufferSize,

length: buffer.byteLength

};

await fileIo.write(file.fd, buffer, options);

bufferSize += buffer.byteLength;

AppStorage.setOrCreate('RecordOffset', bufferSize);

let samples = new Int16Array(buffer);

for (let i = 0; i < samples.length; i++) {

let val = samples[i] / Constants.VOLUME_MAX;

this.sampleValSum += val * val;

this.sampleValCnt += 1;

}

};

this.dataCallBack = readDataCallback;

this.audioCapturer.on('readData', readDataCallback);

this.audioCapturer.on('stateChange', (state: audio.AudioState) => {

if (state === audio.AudioState.STATE_RELEASED) {

fileIo.close(file);

}

});

hilog.info(DOMAIN, TAG, 'Listeners registered.');

this.startRecording();

}

});

}

setDataCallback(dataCallBack: (data: ArrayBuffer) => void) {

this.dataCallBack = dataCallBack;

hilog.info(DOMAIN, 'testTag', '%{public}s', this.dataCallBack.length);

}

// start recording

startRecording() {

if (!this.audioCapturer) {

hilog.warn(DOMAIN, TAG, 'AudioCapturer not initialized yet.');

return;

}

this.audioCapturer?.start().then(() => {

hilog.info(DOMAIN, TAG, 'Recording started.');

}).catch((err: BusinessError) => {

hilog.error(DOMAIN, TAG, `Failed to start recording, code: ${err.code}, message: ${err.message}`);

});

}

// stop recording

async stopAndRelease() {

if (this.audioCapturer) {

try {

await this.audioCapturer.stop();

await this.audioCapturer.release();

if (this.fd !== undefined) {

await fileIo.close(this.fd);

this.fd = undefined;

}

} catch (err) {

hilog.error(DOMAIN, TAG, `Failed to stop recording, code: ${err.code}, message: ${err.message}`);

}

}

}

}

The Constants file:

import { audio } from '@kit.AudioKit';

export class Constants {

static MIN_DB = -90;

static VOLUME_MAX = 32768;

static DRAW_LINE_WIDTH = 2;

static WAVE_HEIGHT_RADIO = 30;

static WAVE_RECORDING_COUNT = 25;

static WAVE_TICK_INTERVAL = 50;

static WAVE_RENDER_COUNT = 100;

static CHANNEL_NUM = audio.AudioChannel.CHANNEL_1;

static SAMPLING_RATE = audio.AudioSamplingRate.SAMPLE_RATE_16000;

static SAMPLE_FORMAT = audio.AudioSampleFormat.SAMPLE_FORMAT_S16LE;

static SAMPLE_BIT = 16;

static SAMPLE_BYTE = Constants.SAMPLE_BIT / 8;

static WAVE_RECORD_INDEX: number[] = new Array(Constants.WAVE_RECORDING_COUNT).fill(0);

}

The AudioRendererManager file:

import { audio } from '@kit.AudioKit';

import { fileIo } from '@kit.CoreFileKit';

import { hilog } from '@kit.PerformanceAnalysisKit';

import { Constants } from '../Constants';

import { audioStateString } from '../utils/Utils';

const DOMAIN = 0x0000;

const TAG = 'AudioRendererManager';

export class AudioRendererManager {

private playFile?: fileIo.File;

private fileSize: number = 0;

private readOffset: number = 0;

private sampleValCnt: number = 0;

private sampleValSum: number = 0;

private renderer?: audio.AudioRenderer;

setReadOffset(offset: number) {

this.readOffset = offset;

this.renderer?.flush();

}

calculateDecibel(): number {

if (this.sampleValCnt === 0) {

return 0;

}

// RMS is the square root of the mean of the squared samples

let rms = Math.sqrt(this.sampleValSum / this.sampleValCnt);

let db = Math.max(Constants.MIN_DB, Math.min(0, 20 * Math.log10(rms)));

this.sampleValCnt = 0;

this.sampleValSum = 0;

return (db + Math.abs(Constants.MIN_DB)) / Math.abs(Constants.MIN_DB);

}

rendererState(): audio.AudioState | undefined {

if (this.renderer !== undefined) {

return this.renderer.state;

}

return undefined;

}

async initRenderer() {

let audioStreamInfo: audio.AudioStreamInfo = {

channels: Constants.CHANNEL_NUM,

samplingRate: Constants.SAMPLING_RATE,

sampleFormat: Constants.SAMPLE_FORMAT,

encodingType: audio.AudioEncodingType.ENCODING_TYPE_RAW,

};

let audioRendererInfo: audio.AudioRendererInfo = {

rendererFlags: 0,

usage: audio.StreamUsage.STREAM_USAGE_MUSIC,

};

let audioRendererOptions: audio.AudioRendererOptions = {

streamInfo: audioStreamInfo,

rendererInfo: audioRendererInfo,

};

this.renderer = await audio.createAudioRenderer(audioRendererOptions);

this.renderer.on('stateChange', (state: audio.AudioState) => {

hilog.info(DOMAIN, TAG, `Audio renderer state changed: ${audioStateString(state)}`);

});

this.renderer.on('writeData', (buffer: ArrayBuffer) => {

let lastLen = this.fileSize - this.readOffset;

let readLen = lastLen >= buffer.byteLength ? buffer.byteLength : lastLen;

fileIo.readSync(this.playFile?.fd, buffer, { offset: this.readOffset, length: readLen });

this.readOffset += readLen;

AppStorage.setOrCreate('RWOffset', this.readOffset);

if (this.readOffset >= this.fileSize) {

AppStorage.setOrCreate('AudioAtEnd', true);

this.readOffset = 0;

}

let samples = new Int16Array(buffer);

for (let i = 0; i < samples.length; i++) {

let val = samples[i] / Constants.VOLUME_MAX;

this.sampleValSum += val * val;

this.sampleValCnt += 1;

}

});

}

async startRenderer(context: UIContext, fileName?: string) {

if (this.renderer === undefined) {

throw new Error(`Start AudioRenderer at undefined state`);

}

let state = this.renderer.state;

if (state === audio.AudioState.STATE_INVALID) {

this.renderer = undefined;

throw new Error(`Start AudioRenderer at invalid state.`);

}

if (state !== audio.AudioState.STATE_PREPARED && state !== audio.AudioState.STATE_STOPPED &&

state !== audio.AudioState.STATE_PAUSED) {

throw new Error(`Start AudioRenderer at wrong state, ${audioStateString(state)}`);

}

if (fileName) {

let contact = context.getHostContext() as Context;

let pathDir = contact.cacheDir;

let filePath = pathDir + `/${fileName}.pcm`;

if (this.playFile?.path !== filePath) {

if (this.playFile) {

await fileIo.close(this.playFile);

await this.renderer.flush();

}

this.playFile = await fileIo.open(filePath, fileIo.OpenMode.READ_ONLY);

}

this.fileSize = fileIo.statSync(filePath).size;

}

await this.renderer.start();

}

async pauseRenderer() {

if (this.renderer === undefined) {

throw new Error(`Pause AudioRenderer at undefined state`);

}

let state = this.renderer.state;

if (state === audio.AudioState.STATE_INVALID) {

this.renderer = undefined;

throw new Error(`Pause AudioRenderer at invalid state.`);

}

if (state !== audio.AudioState.STATE_RUNNING) {

throw new Error(`Pause AudioRenderer at wrong state, ${audioStateString(state)}`);

}

await this.renderer.pause();

}

async stopRenderer() {

if (this.renderer === undefined) {

throw new Error(`Stop AudioRenderer at undefined state`);

}

let state = this.renderer.state;

if (state === audio.AudioState.STATE_INVALID) {

this.renderer = undefined;

throw new Error(`Stop AudioRenderer at invalid state.`);

}

if (state !== audio.AudioState.STATE_RUNNING && state !== audio.AudioState.STATE_PAUSED) {

throw new Error(`Stop AudioRenderer at wrong state, ${audioStateString(state)}`);

}

await this.renderer.stop();

fileIo.closeSync(this.playFile?.fd);

}

async releaseRenderer() {

if (this.renderer === undefined) {

throw new Error(`Release AudioRenderer at undefined state`);

}

let state = this.renderer.state;

if (state === audio.AudioState.STATE_INVALID) {

this.renderer = undefined;

throw new Error(`Release AudioRenderer at invalid state.`);

}

if (state !== audio.AudioState.STATE_PAUSED && state !== audio.AudioState.STATE_STOPPED &&

state !== audio.AudioState.STATE_PREPARED) {

throw new Error(`Release AudioRenderer at wrong state, ${audioStateString(state)}`);

}

await this.renderer.release();

}

}
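Each renderer method above repeats the same kind of state check before acting. Those checks can be summarized as a small transition table. The sketch below uses plain strings instead of audio.AudioState so that it runs outside HarmonyOS; the type and function names are hypothetical, and the allowed states are taken from the conditions in the code above.

```typescript
type RendererState = 'prepared' | 'running' | 'paused' | 'stopped' | 'released';
type RendererAction = 'start' | 'pause' | 'stop' | 'release';

// States from which each action is allowed, mirroring the checks in
// startRenderer, pauseRenderer, stopRenderer, and releaseRenderer.
const ALLOWED_STATES: Record<RendererAction, RendererState[]> = {
  start: ['prepared', 'stopped', 'paused'],
  pause: ['running'],
  stop: ['running', 'paused'],
  release: ['paused', 'stopped', 'prepared']
};

function canPerform(action: RendererAction, state: RendererState): boolean {
  return ALLOWED_STATES[action].indexOf(state) !== -1;
}

// Throws with a descriptive message when the action is not allowed.
function assertCanPerform(action: RendererAction, state: RendererState): void {
  if (!canPerform(action, state)) {
    throw new Error(`Cannot ${action} AudioRenderer in state ${state}`);
  }
}
```

Callers such as a play/pause button can use canPerform to decide whether to call the renderer at all, instead of relying on a thrown error.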

The Utils file provides the findKeyword, FormatString, toTimeStr, toTimeStr2, and audioStateString helpers imported by the files above. Its source is not reproduced in this article.
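The component imports findKeyword, FormatString, toTimeStr, and toTimeStr2 from the Utils file, but their implementations do not appear in this article. The following is only a guess at what they might look like, based on how they are called: the names and the char/isAim fields come from the component code, while the bodies and the time formats (mm:ss and hh:mm:ss) are assumptions. audioStateString is omitted because it depends on audio.AudioState.

```typescript
// Assumed PCM format: 16 kHz, mono, 16-bit, so 32000 bytes per second.
const PCM_BYTES_PER_SECOND = 16000 * 2;

// One run of text and whether it matches the search keyword.
interface FormatString {
  char: string;
  isAim: boolean;
}

function pad2(value: number): string {
  return value < 10 ? '0' + value : String(value);
}

// Assumed format: byte offset -> "mm:ss".
function toTimeStr(offsetBytes: number): string {
  const total = Math.floor(Math.max(0, offsetBytes) / PCM_BYTES_PER_SECOND);
  return pad2(Math.floor(total / 60)) + ':' + pad2(total % 60);
}

// Assumed format: byte offset -> "hh:mm:ss".
function toTimeStr2(offsetBytes: number): string {
  const total = Math.floor(Math.max(0, offsetBytes) / PCM_BYTES_PER_SECOND);
  const hours = Math.floor(total / 3600);
  const minutes = Math.floor((total % 3600) / 60);
  return pad2(hours) + ':' + pad2(minutes) + ':' + pad2(total % 60);
}

// Split text into runs, marking every occurrence of the keyword.
function findKeyword(text: string, keyword: string): FormatString[] {
  if (text.length === 0) {
    return [];
  }
  if (keyword.length === 0) {
    return [{ char: text, isAim: false }];
  }
  const parts: FormatString[] = [];
  let cursor = 0;
  let hit = text.indexOf(keyword, cursor);
  while (hit !== -1) {
    if (hit > cursor) {
      parts.push({ char: text.slice(cursor, hit), isAim: false });
    }
    parts.push({ char: keyword, isAim: true });
    cursor = hit + keyword.length;
    hit = text.indexOf(keyword, cursor);
  }
  if (cursor < text.length) {
    parts.push({ char: text.slice(cursor), isAim: false });
  }
  return parts;
}
```

With an empty keyword, findKeyword returns the whole text as a single non-highlighted run, which matches how the component renders text before any search term is set.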

The PermissionsCheck file:

import { abilityAccessCtrl, bundleManager, Permissions } from '@kit.AbilityKit';

import { BusinessError } from '@kit.BasicServicesKit';

import { hilog } from '@kit.PerformanceAnalysisKit';

class PermissionsCheck {

// Verify whether the application is authorized

async checkPermissions(permission: Permissions) {

let grantStatus: abilityAccessCtrl.GrantStatus = await this.checkAccessToken(permission);

if (grantStatus !== abilityAccessCtrl.GrantStatus.PERMISSION_GRANTED) {

return false;

}

return true;

}

async checkAccessToken(permission: Permissions): Promise<abilityAccessCtrl.GrantStatus> {

let atManager: abilityAccessCtrl.AtManager = abilityAccessCtrl.createAtManager();

let grantStatus: abilityAccessCtrl.GrantStatus = abilityAccessCtrl.GrantStatus.PERMISSION_DENIED;

// Obtain the accessTokenID for the application

let tokenId: number = 0;

try {

let bundleInfo: bundleManager.BundleInfo =

await bundleManager.getBundleInfoForSelf(bundleManager.BundleFlag.GET_BUNDLE_INFO_WITH_APPLICATION);

let appInfo: bundleManager.ApplicationInfo = bundleInfo.appInfo;

tokenId = appInfo.accessTokenId;

grantStatus = await atManager.checkAccessToken(tokenId, permission);

} catch (error) {

let err: BusinessError = error as BusinessError;

hilog.error(0x000, 'testTag', `Failed to check access token ${err.code}, message is ${err.message}`);

}

return grantStatus;

}

}

export default new PermissionsCheck();

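Add the following code to the onWindowStageCreate method in EntryAbility.ets. The file also needs abilityAccessCtrl imported from '@kit.AbilityKit' and BusinessError imported from '@kit.BasicServicesKit':

let atManager = abilityAccessCtrl.createAtManager();

// Ask the user for the microphone permission when the window stage is created

atManager.requestPermissionsFromUser(this.context, ['ohos.permission.MICROPHONE']).then((data) => {

// data.authResults holds one entry per requested permission; 0 means granted

}).catch((err: BusinessError) => {

console.error(`Failed to request microphone permission, code: ${err.code}, message: ${err.message}`);

});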
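The result passed to the then callback can be checked to find out which permissions the user refused. The helper below is a small sketch that works on a plain object with the same permissions and authResults fields; PermissionResultLike and deniedPermissions are hypothetical names, and it relies on 0 meaning "granted" in authResults.

```typescript
// Minimal stand-in for the fields of the permission request result used here.
interface PermissionResultLike {
  permissions: string[];
  authResults: number[];
}

// Return the permissions whose result is anything other than 0 (granted).
function deniedPermissions(result: PermissionResultLike): string[] {
  return result.permissions.filter((_permission, index) => result.authResults[index] !== 0);
}
```

If the returned list contains ohos.permission.MICROPHONE, the app can show a hint that guides the user to the system settings instead of silently failing when the record button is tapped.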

After that, the app can be tested on a real device.

Closing

- I hope this article is helpful!

- If you find any errors or inaccuracies, please leave a comment. Thank you!
